Are you investing in generative artificial intelligence but feeling uncertain about the return? Many companies face the challenge of validating the real performance of these complex models in their daily operations.

Without rigorous evaluation, you run the risk of implementing solutions that do not meet your expectations, generating unnecessary costs and frustrating teams and customers.

Discover how you can transform this uncertainty into a competitive advantage, ensuring that your generative AI delivers concrete results and optimizes your business processes.

The Challenge of Generative AI Evaluation for Businesses

You know that the proliferation of Generative AI demands robust evaluation methods. Companies rely on these advanced systems for critical operations, from customer support to complex data analysis.

You need to understand the true capabilities and limitations of these models for strategic implementation. This avoids wasting resources and ensures that technology works in your favor.

Achieving optimal Generative AI performance in your use cases requires rigorous evaluation. Without standardized LLM benchmarks, you risk implementing models that fail to meet operational requirements.

This can lead to inefficiencies, increase your costs, and compromise results. You avoid these problems by adopting a clear and objective evaluation framework.

LLM benchmarks offer an objective framework for evaluating large language models. You go beyond anecdotal evidence, obtaining quantifiable metrics to compare models on specific tasks.

For AI developers, these benchmarks highlight areas for improvement. They guide your architectural decisions, allowing continuous model enhancement with a focus on results.

Generic Metrics vs. Corporate Needs: A Crucial Analysis

Many companies question whether general metrics truly measure impact. You discover that traditional LLM benchmarks often fail to capture the nuance of complex business scenarios.

You need an evaluation that directly connects to business objectives. This means going beyond linguistic fluency and focusing on practical relevance and the value generated for your organization.

Construtora Bello, from Belo Horizonte, faced challenges using AI to generate technical proposals. Generic tools did not understand the specific industry terminology.

After implementing a benchmark focused on engineering terminology and compliance, Bello achieved a 25% increase in proposal accuracy. In addition, it reduced team review time by 10%.

This demonstrated that investing in specific evaluation resulted in a clear Return on Investment (ROI). You can also achieve similar results by aligning evaluation with your objectives.

Mastering LLM Benchmark Methodologies

You evaluate Generative AI performance through diverse methodologies. This includes both quantitative and qualitative assessments, ensuring a complete and in-depth view.

Quantitative metrics frequently focus on precision, coherence, and relevance in specific tasks. You apply them in text generation or summarization, comparing model outputs with human-annotated reference data.
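As a rough illustration of comparing model outputs with human-annotated references, the sketch below computes a token-overlap F1 score. The function names `token_f1` and `mean_token_f1` are hypothetical, and whitespace tokenization is a simplifying assumption. A production benchmark would likely use a metric suited to the task.

```python
from collections import Counter


def token_f1(prediction: str, reference: str) -> float:
    """Token-overlap F1 between a model output and a human-written reference."""
    pred_tokens = prediction.lower().split()
    ref_tokens = reference.lower().split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    # Multiset intersection: a shared token counts only as often as it
    # appears in both texts.
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def mean_token_f1(pairs: list[tuple[str, str]]) -> float:
    """Average token F1 over (model_output, reference) pairs."""
    return sum(token_f1(p, r) for p, r in pairs) / len(pairs)
```

Averaging this score over a representative set of pairs gives one comparable number per model, which is the kind of quantifiable signal this section refers to.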

Qualitative evaluations, on the other hand, assess aspects like creativity and fluency. You measure the overall user experience, using expert review or A/B testing with end-users.

The choice of evaluation method depends on your specific use case and desired outcome. You align the methodology to obtain the most relevant insights for your operation.

Domain-specific LLM benchmarks are crucial. A model excellent in general knowledge tasks may fail when dealing with your industry’s jargon or complex compliance requirements.

Quantitative vs. Qualitative Evaluation: Balancing Precision and Context

You wonder which methodology to prioritize in your evaluation. While quantitative evaluation offers objective data, qualitative evaluation adds the layer of human perception and nuance that AI does not yet fully replicate.

Integrating both approaches is fundamental. You obtain a robust analysis that combines the cold precision of numbers with the richness of subjective feedback, optimizing AI for your audience.

Clínica Vitalis, in São Paulo, sought to optimize its patient screening via chatbot. Initially, the benchmark focused only on the accuracy of responses to symptoms, a quantitative metric.

However, patients complained about the “coldness” of the interaction. Vitalis then added a qualitative evaluation of empathy and fluency, with human reviewers, increasing patient satisfaction by 15%.

This example shows that you should not neglect the quality of interaction. A good benchmark balances these factors, ensuring that AI not only gets it right but also engages and satisfies the user.
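One simple way to balance the two kinds of evidence is a weighted blend of an automated accuracy score and human reviewer ratings. The sketch below is only an assumption about how such a blend could look. The name `combined_score`, the 1 to 5 rating scale, and the default weight of 0.6 are all hypothetical choices, not values from any of the cases described here.

```python
from statistics import mean


def combined_score(accuracy: float, reviewer_ratings: list[int],
                   accuracy_weight: float = 0.6, max_rating: int = 5) -> float:
    """Blend automated accuracy (0 to 1) with human ratings on a 1..max_rating scale."""
    if not 0.0 <= accuracy_weight <= 1.0:
        raise ValueError("accuracy_weight must be between 0 and 1")
    if not reviewer_ratings:
        raise ValueError("at least one reviewer rating is required")
    # Rescale the mean rating from [1, max_rating] to [0, 1].
    qualitative = (mean(reviewer_ratings) - 1) / (max_rating - 1)
    return accuracy_weight * accuracy + (1 - accuracy_weight) * qualitative
```

The weight makes the trade-off explicit: lowering `accuracy_weight` gives reviewer judgments on empathy and fluency more influence on the final score.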

Step-by-Step: Building Your First Custom LLM Benchmark

You can create an effective benchmark by following a few steps. First, clearly define the business objectives for Generative AI in your company.

Next, select the most critical use cases and identify performance metrics. These should be aligned with your existing KPIs (Key Performance Indicators).

Collect and annotate a representative and diverse dataset. Ensure that it reflects the jargon and specific scenarios of your area of operation.

Perform comparative tests with different available LLM models. Record and analyze the results, identifying the strengths and weaknesses of each.

Iterate and refine your model and benchmark continuously. This process ensures that your Generative AI adapts to changes and maintains optimal performance over time.
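The comparison step above can be sketched as a small harness that runs every candidate model over the same annotated dataset and averages a metric. Everything here is illustrative: `run_benchmark`, `exact_match`, and the two toy models are hypothetical stand-ins, and a real setup would call actual LLM APIs and use metrics tied to your KPIs.

```python
from typing import Callable

# A "model" here is any function that maps a prompt to a text answer.
Model = Callable[[str], str]


def exact_match(output: str, expected: str) -> float:
    """1.0 if the answers match after trimming and lowercasing, else 0.0."""
    return float(output.strip().lower() == expected.strip().lower())


def run_benchmark(models: dict[str, Model],
                  dataset: list[tuple[str, str]],
                  metric: Callable[[str, str], float] = exact_match) -> dict[str, float]:
    """Return the mean metric score per model over (prompt, expected) pairs."""
    results = {}
    for name, model in models.items():
        scores = [metric(model(prompt), expected) for prompt, expected in dataset]
        results[name] = sum(scores) / len(scores)
    return results


# Toy stand-ins for real LLM calls, for illustration only.
def model_a(prompt: str) -> str:
    return {"2+2?": "4", "Capital of France?": "Paris"}.get(prompt, "")


def model_b(prompt: str) -> str:
    return {"2+2?": "4"}.get(prompt, "unknown")


dataset = [("2+2?", "4"), ("Capital of France?", "paris")]
scores = run_benchmark({"model_a": model_a, "model_b": model_b}, dataset)
best = max(scores, key=scores.get)
```

Keeping the dataset and metric fixed while swapping models is what makes the recorded results comparable from one iteration to the next.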

Optimizing Use Cases with LLM Benchmarking: Tangible Results

You precisely calibrate Generative AI for your needs through in-depth LLM benchmarks. This ensures that each AI application delivers maximum value.

An AI Agent for customer service, for example, can be evaluated by response accuracy. You also measure sentiment detection and resolution time, ensuring effective and satisfactory interactions, including in multi-user WhatsApp management.

In content creation, benchmarks evaluate originality and consistency. You verify adherence to brand voice, ensuring that AI delivers high-quality content aligned with your objectives.

This targeted evaluation allows continuous improvement. You ensure that AI consistently provides high-level results, directly impacting marketing effectiveness and your brand’s reputation.

Generative AI offers transformative potential in various use cases. Customer support automation and content creation are prime examples of applications you can optimize.

Generative AI in Customer Service vs. Content Generation: Distinct Priorities

You deal with different priorities when using AI for service or content. In service, the urgency and accuracy of responses are crucial for customer satisfaction.

In content generation, creativity, originality, and adherence to brand voice become the pillars. You balance these factors to ensure engagement and brand recognition.

DaJu Online Store, from Curitiba, used AI to personalize product descriptions. Without benchmarking, descriptions were generic and failed to convert customers.

DaJu implemented a benchmark focused on engagement, SEO keyword usage, and click-through rate. As a result, sales of products with AI-generated descriptions rose by 18%, and content creation time fell by 12% with the help of WhatsApp bulk sender capabilities.

This case shows that you must adapt your benchmark. The goal is to ensure that AI perfectly adjusts to your needs, whether to solve problems or to boost your sales.

Data Security and LGPD: Pillar of Trust in Generative AI

You need to prioritize data security when implementing Generative AI. Protecting sensitive information is crucial, especially when dealing with customer data.

The General Data Protection Law (LGPD) requires you to treat personal data rigorously. Ensure that your AI models and benchmarks are in full compliance with current legislation, especially when integrating with an official WhatsApp Business API.

Implement encryption and anonymization measures. You must strictly control access to training data and results generated by AI, protecting user privacy.

A robust benchmark includes security and compliance tests. You validate that AI does not expose confidential information, mitigating risks of leaks and legal penalties.
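A minimal sketch of such a leak test might scan model outputs for patterns that look like personal data. The two patterns below (the formatted Brazilian CPF layout and a simple email shape) are illustrative assumptions only, and the names `find_pii` and `leak_rate` are hypothetical. A real compliance check would need far broader coverage and legal review.

```python
import re

# Illustrative patterns only; a real audit would need a much broader set.
PII_PATTERNS = {
    "cpf": re.compile(r"\b\d{3}\.\d{3}\.\d{3}-\d{2}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def find_pii(text: str) -> dict[str, list[str]]:
    """Return the matches for each pattern that appears in the text."""
    hits = {name: pattern.findall(text) for name, pattern in PII_PATTERNS.items()}
    return {name: found for name, found in hits.items() if found}


def leak_rate(outputs: list[str]) -> float:
    """Share of model outputs containing at least one suspected PII match."""
    if not outputs:
        return 0.0
    return sum(1 for o in outputs if find_pii(o)) / len(outputs)
```

Tracking `leak_rate` alongside quality metrics lets a benchmark flag a model that scores well on accuracy but exposes confidential information.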

Customer trust in your Generative AI depends on your ability to protect their data. You establish this trust by adopting best practices in security and LGPD compliance.

Strategic and Financial Impact of LLM Benchmarking

As a business strategist, you use evaluations for investment decisions. Understanding the comparative strengths of models helps you select the most suitable Generative AI.

This strategic evaluation minimizes risks and maximizes potential returns. You ensure that your AI investments bring significant and sustainable financial results.

You allocate resources efficiently, guided by data. By mapping model capabilities to high-value use cases, you optimize the ROI of your AI initiatives.

Understanding where each model stands on your benchmark allows you to assess competitive positioning. You identify opportunities to differentiate your offerings and fill market gaps, staying ahead.

Strategic insights from evaluation reports also assist in risk management. You develop contingency plans by understanding model limitations, protecting operations and customer experience.

ROI of Benchmarking: Transforming Costs into Strategic Investment

You can calculate the Return on Investment (ROI) of benchmarking. This clearly demonstrates the financial value that a robust evaluation process adds to your company.

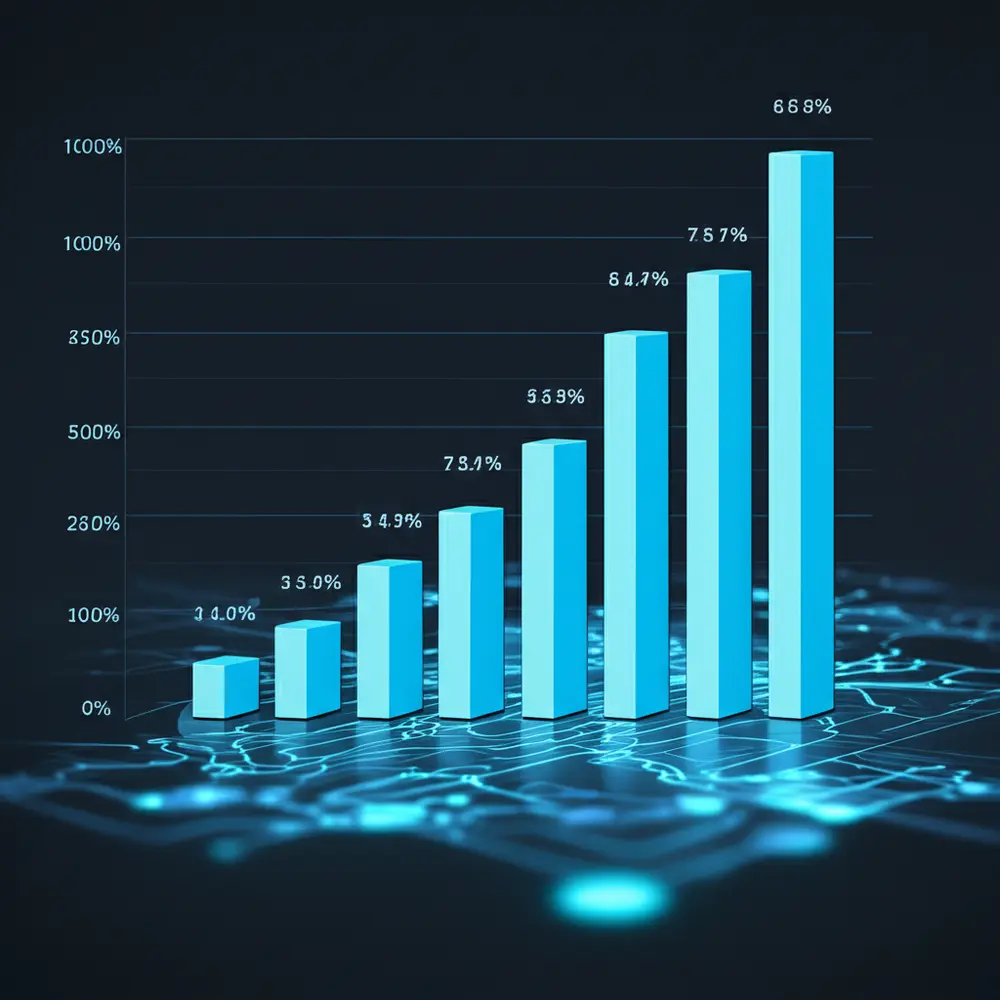

Consider a company that invested $10,000 in a benchmark tool and, with it, selected an LLM that reduced service costs by 15%, generating $50,000 in annual savings.

You calculate ROI as: (Investment Gain – Investment Cost) / Investment Cost.

In this example, the calculation would be: ($50,000 – $10,000) / $10,000 = $40,000 / $10,000 = 4. The ROI is 400%.
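The same arithmetic can be expressed as a short helper, shown here as a sketch. The function name `roi` is hypothetical, and the figures are the ones from the example above.

```python
def roi(gain: float, cost: float) -> float:
    """Return on investment as a fraction: (gain - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (gain - cost) / cost


example_roi = roi(gain=50_000, cost=10_000)
print(f"ROI: {example_roi:.0%}")  # prints "ROI: 400%"
```

Wrapping the formula in a function makes it easy to rerun the calculation with your own investment and savings figures.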

A study by Nexus Consulting indicates that companies with well-defined AI benchmarks experience an average increase of 22% in productivity and an 18% reduction in operational costs.

Transportadora Prime, from Manaus, used benchmarks to optimize routes with AI. It identified an LLM that, with the same initial cost, promised 20% more efficiency in logistics than other options.

The implementation resulted in a 15% reduction in fuel consumption and a 10% increase in on-time delivery. This translated into annual savings of $75,000 and a 650% ROI in the first year, which by the formula above implies an investment of roughly $10,000.

Challenges and the Future of LLM Benchmarking: Ensuring Reliability and Ethics

The dynamic nature of LLMs presents continuous evaluation challenges. Generative AI performance evolves rapidly, requiring frequent updates to any LLM benchmark.

You face the management of annotation costs for various business tasks. This represents a significant obstacle in building comprehensive evaluation datasets.

Developing universal metrics for highly specialized use cases remains complex. The subjectivity in evaluating creative or strategic results adds a layer of difficulty.

Bias is another critical concern in LLM evaluation. Models trained on vast datasets can inadvertently inherit and amplify prejudices, generating discriminatory results.

This is problematic in sensitive use cases, such as hiring or lending. You must implement rigorous checks to ensure the fairness and reliability of your AI.

Bias and Ethics in Generative AI: How to Ensure Impartiality

You need to combat bias to ensure the ethics of your Generative AI. Models tend to reproduce prejudices present in training data, negatively affecting decisions.

A robust benchmark includes tests for fairness, representativeness, and ethical compliance. You ensure that AI performance is equitable for all demographic groups, building trust.
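One possible fairness test, sketched below under simplifying assumptions, compares model accuracy across demographic groups and reports the largest gap. The names `accuracy_by_group` and `max_accuracy_gap` are hypothetical, and the group labels are assumed to come from your own annotated evaluation set. Accuracy parity is only one of several fairness criteria you might choose.

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, bool]]) -> dict[str, float]:
    """records holds (group_label, was_model_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, is_correct in records:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {g: correct[g] / totals[g] for g in totals}


def max_accuracy_gap(records: list[tuple[str, bool]]) -> float:
    """Difference between the best-served and worst-served group."""
    rates = accuracy_by_group(records)
    return max(rates.values()) - min(rates.values())
```

A benchmark could then fail any model whose gap exceeds a threshold you set, so that equitable performance is checked on every run.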

ConteMix Accounting Office, in Porto Alegre, used AI for credit application screening. It noticed a bias in favor of certain groups, which led to customer dissatisfaction.

By refining its benchmark with impartiality metrics and diversity in training data, ConteMix reduced bias by 30%. This increased customer satisfaction by 10% and improved regulatory compliance.

You ensure that your AI is not only efficient but also fair and responsible. This is a crucial competitive differentiator in the current scenario, where ethics are as valued as technology.

The Importance of Specialized Support in LLM Benchmarking

You need specialized support to implement complex benchmarks. The complexity of AI models and evaluation methodologies requires technical expertise.

Good support helps you set up datasets, interpret metrics, and optimize models. You ensure that your benchmarking process is efficient and produces actionable insights.

Experienced professionals can guide you in detecting and mitigating biases. They also assist in adapting benchmarks to constant regulatory and technological changes.

You avoid costly errors and accelerate the time to value of your AI. Relying on a partner who offers high-quality support is an essential strategic investment.

Such a partner helps you keep your Generative AI up to date and performing well. Ensure that it complies with market best practices and your specific needs.

Empowering AI Agents with Continuous Benchmarking

You know that the performance of AI Agents, such as those from Evolvy, depends on robust LLMs. An effective LLM benchmark directly optimizes these intelligent agents in your operation.

It ensures that they act reliably in various business use cases. You can be confident that your autonomous agents will deliver consistent and accurate results.

By rigorously evaluating underlying LLMs, your AI Agents deliver superior results. This includes improved customer interactions and more precise data processing.

This continuous evaluation cycle is crucial for the advancement of AI Agent technology in your company. You keep your solution always at the forefront, ensuring competitiveness.

The LLM benchmark process is not static; it requires continuous evaluation. As models and tasks evolve, regular re-evaluation ensures constant optimization and relevance.
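Regular re-evaluation can be sketched as a comparison between a stored baseline and the latest benchmark run. The helper below is a hypothetical example: the name `find_regressions` and the default tolerance of 0.02 are assumptions, and it assumes that higher scores are better for every metric.

```python
def find_regressions(baseline: dict[str, float], current: dict[str, float],
                     tolerance: float = 0.02) -> dict[str, float]:
    """Return metrics whose score dropped by more than `tolerance` vs. baseline."""
    regressions = {}
    for metric, old in baseline.items():
        new = current.get(metric)
        # Metrics missing from the current run are skipped, not flagged.
        if new is not None and old - new > tolerance:
            regressions[metric] = round(old - new, 4)
    return regressions
```

Running a check like this after each model update turns continuous evaluation into a routine alert rather than a manual review.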

AI Agents Platforms: Choosing the Right Solution for Your Company

You need to choose the AI Agents platform that best suits you. Evaluate integration flexibility, scalability, and customization capabilities for your specific needs.

Prioritize solutions that offer support for continuous and transparent benchmarks. This way, your agents keep performing well and stay aligned with your business objectives.

Nexloo, a technology company, implemented AI Agents from Evolvy for internal process automation. Initially, performance was good, but it fluctuated with model updates.

By adopting continuous benchmarking provided by Evolvy, Nexloo was able to monitor and optimize the agents. This resulted in 99% stability in task execution and a 20% increase in productivity.

By integrating these insights with AI Agents, you automate complex workflows. This increases efficiency and significantly improves the overall performance of Generative AI.

To optimize your AI capabilities and develop reliable AI Agents, visit evolvy.io/ai-agents/. You ensure that performance aligns with your strategic objectives.

You now understand that LLM benchmarking is indispensable in the Generative AI landscape. These rigorous evaluation processes are essential for discerning the real capabilities of language models.

Understanding a model’s strengths and weaknesses is vital. You decide if an LLM is truly suitable for your use cases, preventing wrong investments in AI solutions.

For developers and strategists, precise LLM benchmark data offers clarity. You make informed strategic decisions about model selection and resource allocation in complex projects.

Continuous evaluation frameworks are fundamental for innovation. You ensure that Generative AI performance meets operational demands and consistently delivers business value.

As your company explores advanced applications, such as AI Agents, the precision of LLM benchmarking becomes even more critical. You ensure optimal performance for autonomous systems.

Platforms that create sophisticated AI Agents, such as Evolvy, depend heavily on these evaluations. They validate the agent’s intelligence and reliability in real business scenarios.

Adopting an effective LLM benchmark strategy becomes a competitive differentiator. You harness the power of Generative AI, transforming data into actionable insights and maintaining market leadership.

Therefore, invest in precise evaluation methodologies and apply them consistently. This approach prepares your AI investments for the future, ensuring they contribute to your strategic objectives.